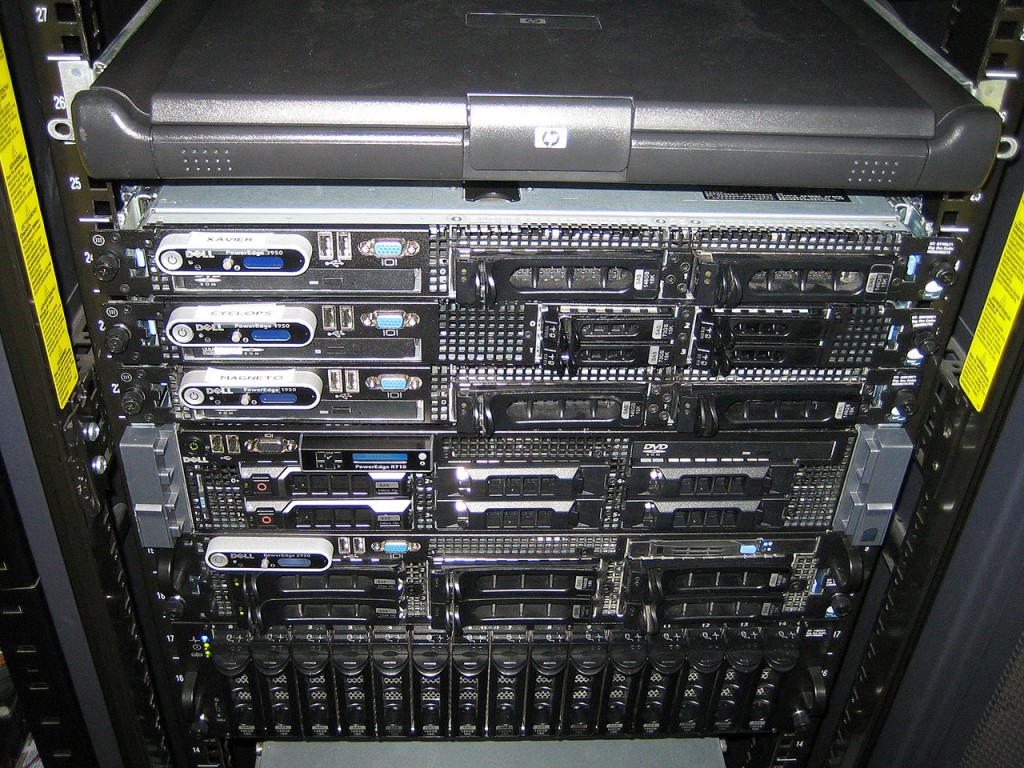

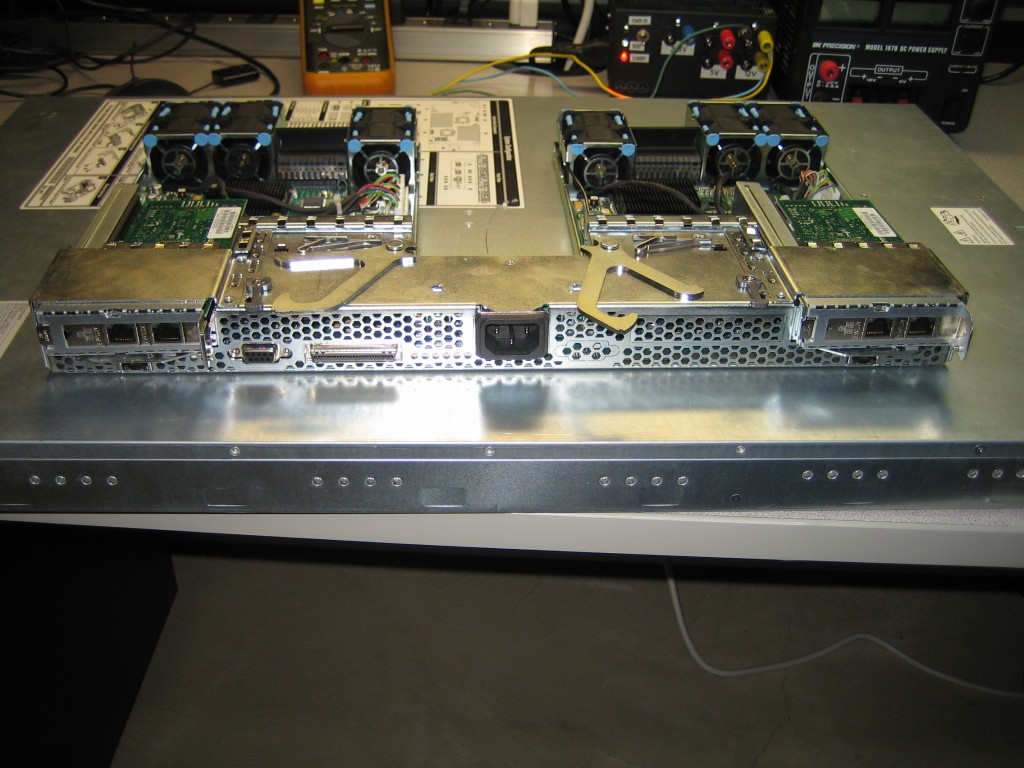

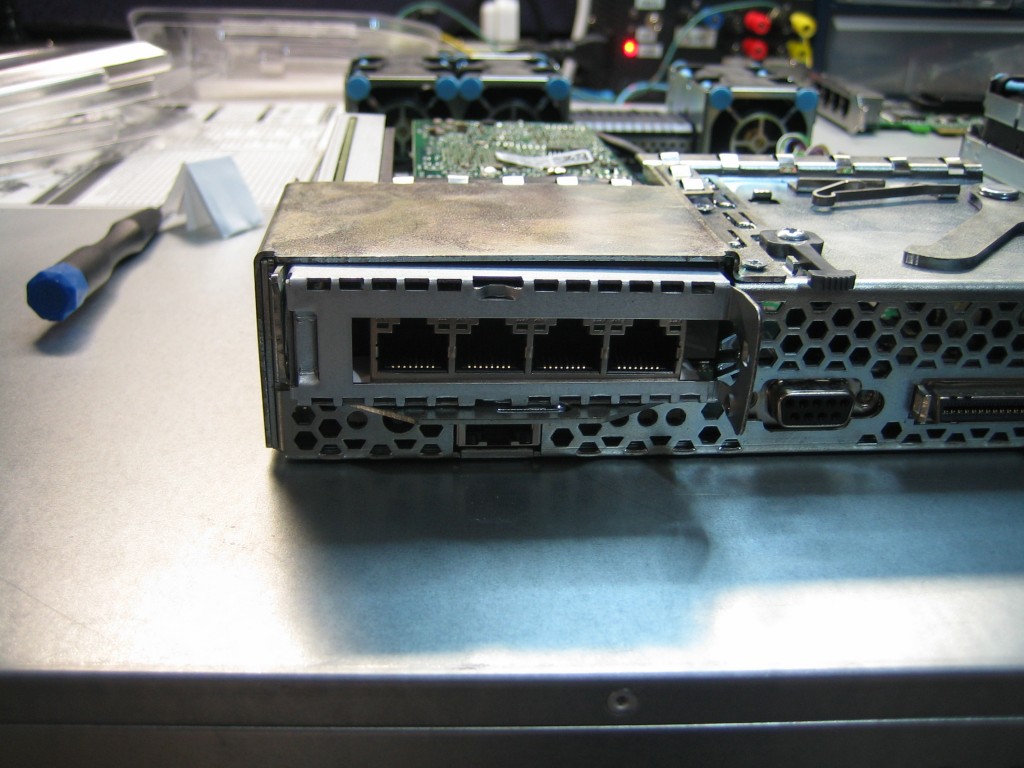

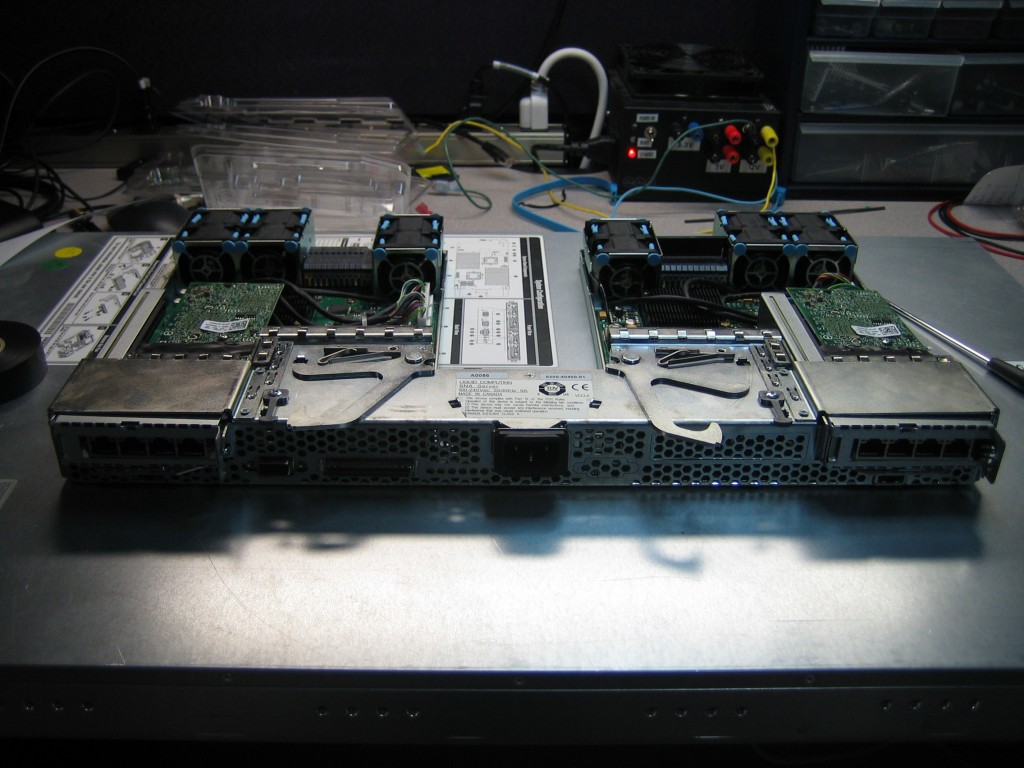

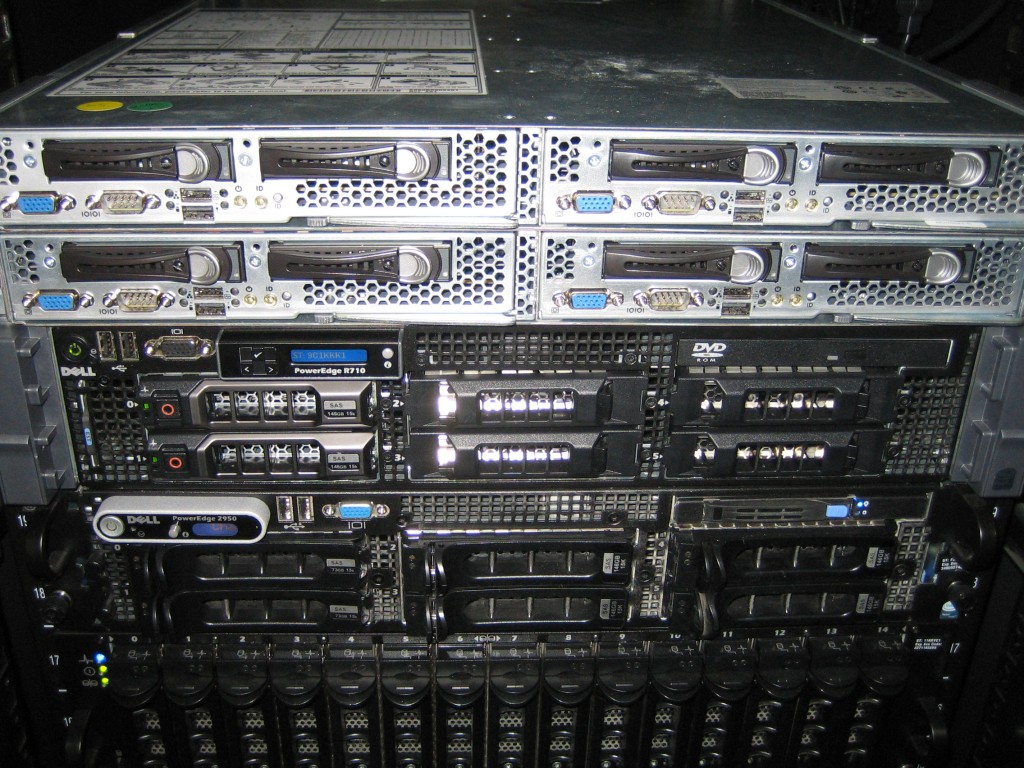

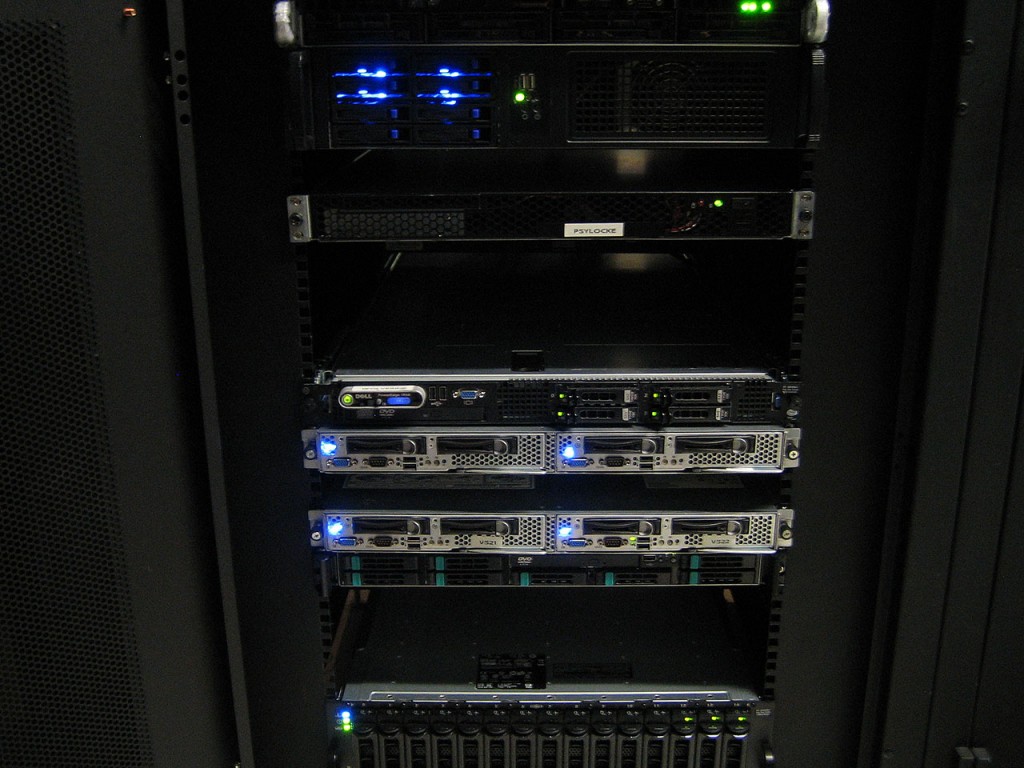

The time has come to rack up the servers at the data center. The plan is to decommission the old Dell PowerEdge 1950s and replace them with these Intel SR1680MV servers.

Out with the old. The PE1950s have been real workhorses. Sure, they top out at 32GB of RAM, but back in the Windows 2003 days this was enough to run a lot of instances of the OS, and countless instances of CentOS Linux. I was originally running 2 vSphere nodes and 1 Proxmox node.

Psylocke is a purpose-built firewall. It’s made of off-the-shelf components: an Intel motherboard, an Intel Celeron 440 and 2GB of RAM. Connections are handled by an Intel Pro/1000 VT Quad NIC. The OS is pfSense 2.0.1. This combination has been shown to easily push 500Mbit/s of inter-zone traffic. pfSense is by far the most powerful free firewall solution I’ve come across.

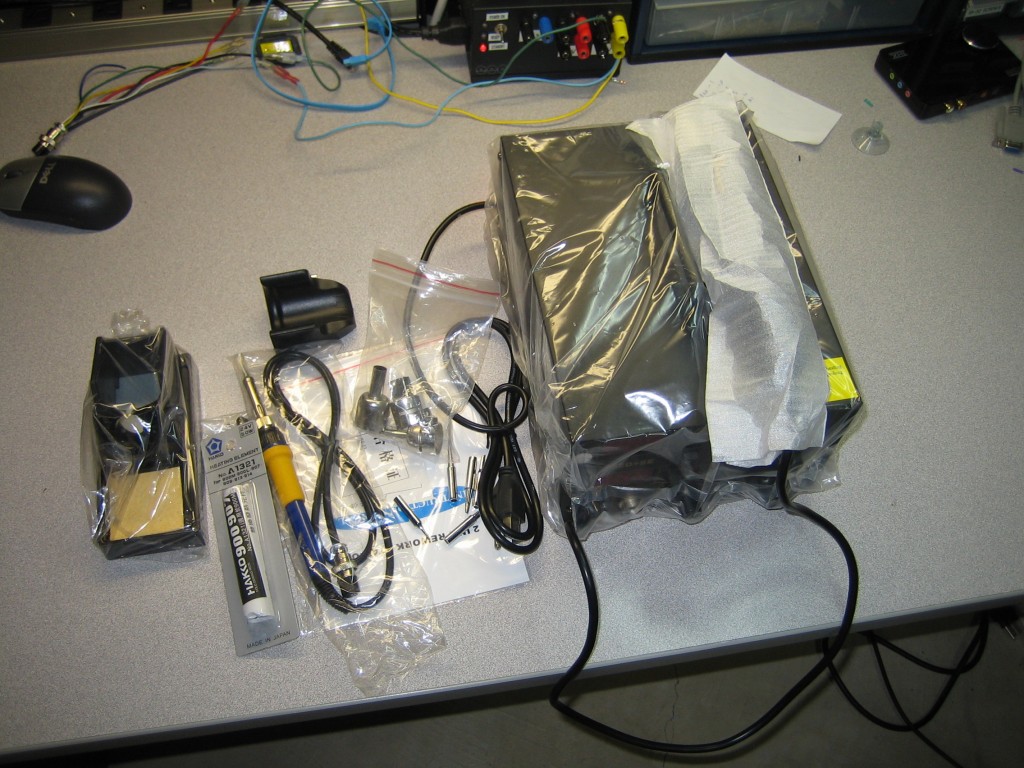

I’m planning to convert a Firebox firewall to pfSense at some point in the future. But that’s another project.

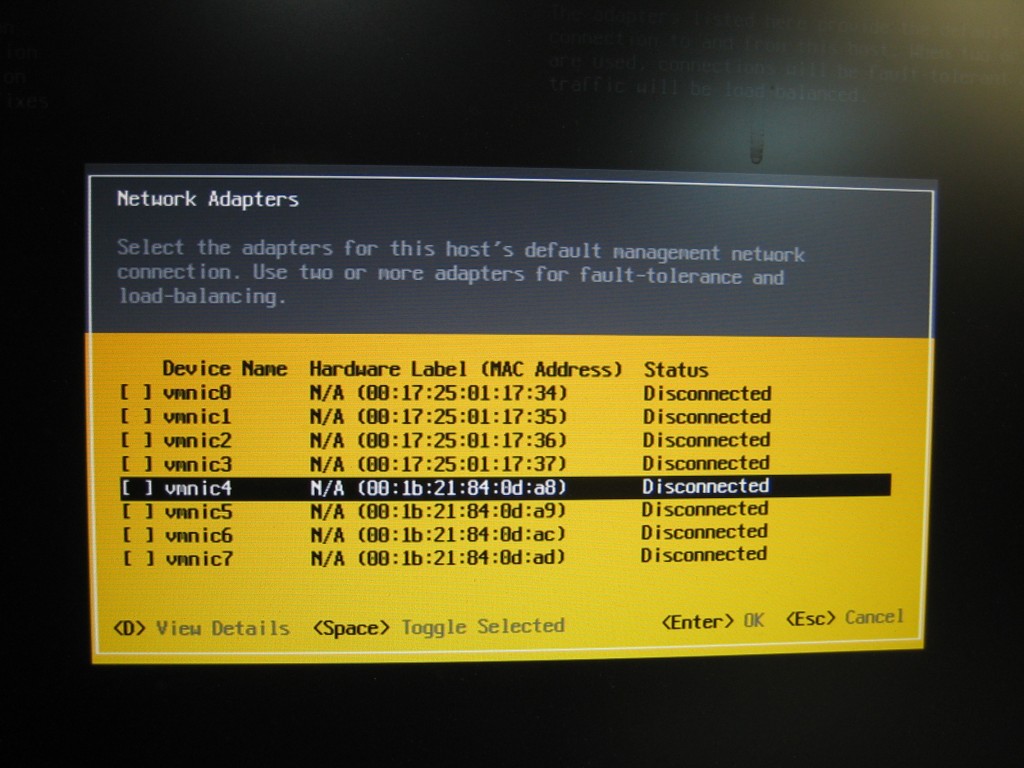

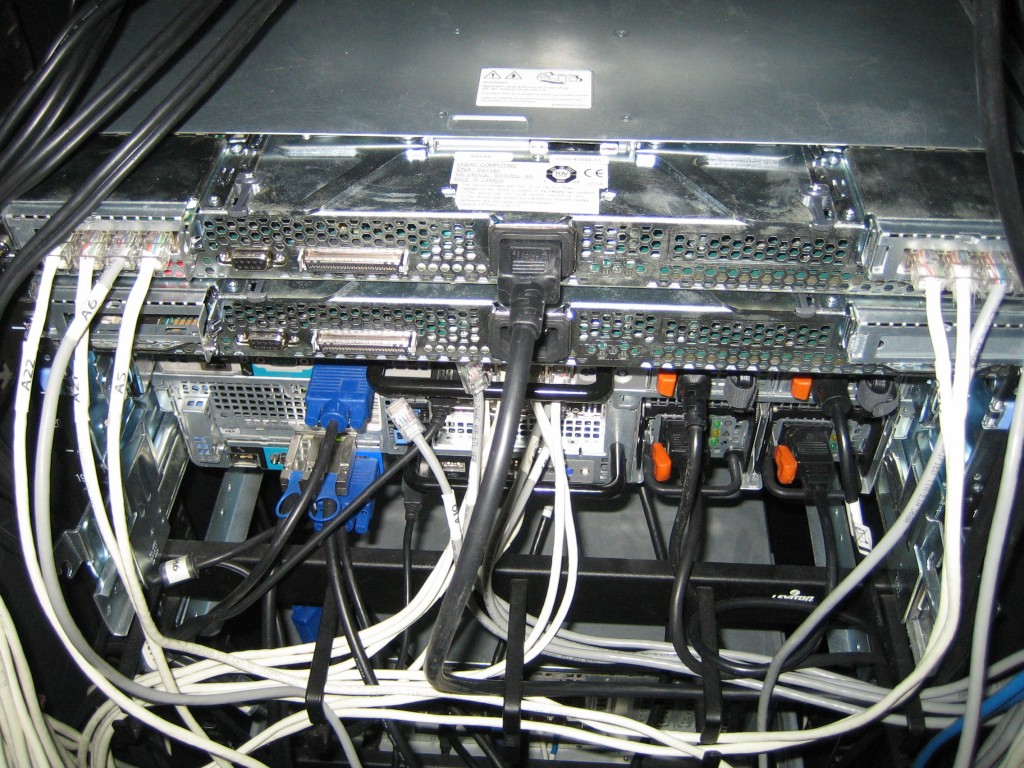

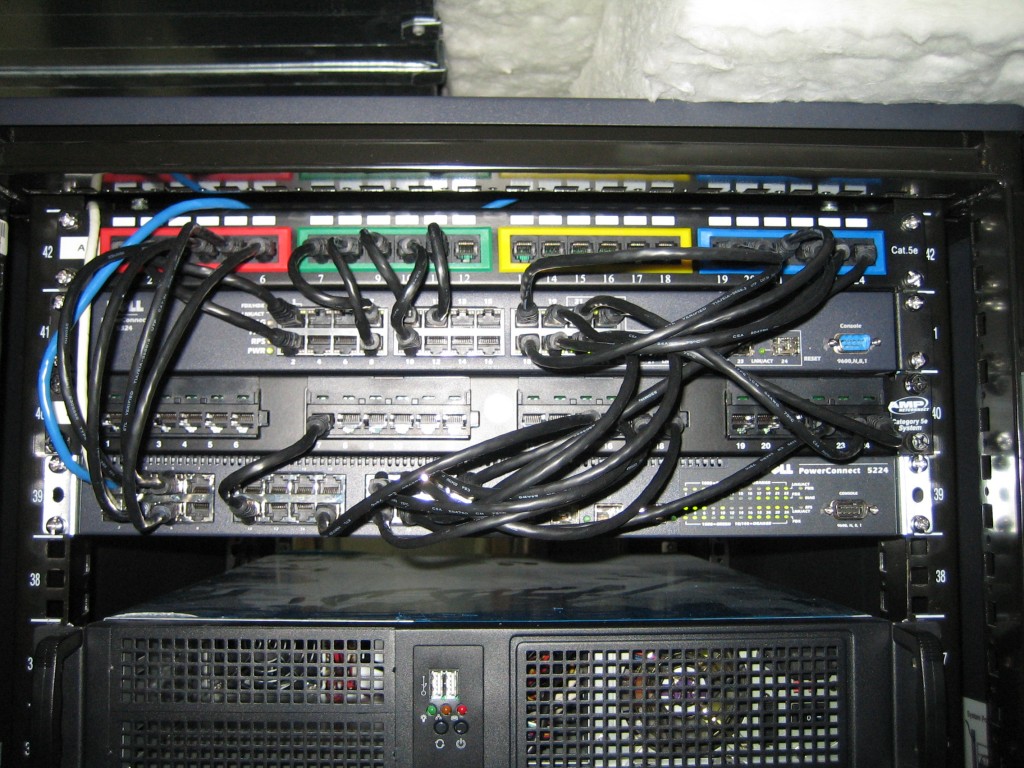

New servers racked up and fired up. I had to reconfigure the servers on site because some VLANs had to be moved around when I rewired some of the switch connections. The file server is another Intel server. It’s an Intel SR1550 running two E5160 3.0GHz Xeons with an external SAS controller and NexentaStor 3.1. NexentaStor is a Solaris-based file server that uses ZFS for the file system. ZFS provides inline block-level deduplication and inline compression. NexentaStor has proven to be a rock-solid file server solution. With iSCSI MPIO support, balancing bandwidth across multiple NICs is trivial. ZFS can also use SSDs as an intermediate cache, and that block-level caching makes random access performance skyrocket.
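For anyone curious what block-level deduplication combined with compression looks like in principle, here is a rough Python sketch. It’s a toy to illustrate the idea, not how ZFS or NexentaStor actually implement it: the `DedupStore` class, the 4KB block size, and the choice of SHA-256 plus zlib are all my own assumptions for the example.

```python
import hashlib
import zlib

BLOCK_SIZE = 4096  # hypothetical block size, chosen only for illustration


class DedupStore:
    """Toy block store: identical blocks are kept once, and kept compressed."""

    def __init__(self, block_size=BLOCK_SIZE):
        self.block_size = block_size
        self.blocks = {}    # checksum -> compressed block data
        self.refcount = {}  # checksum -> number of references to that block

    def write(self, data):
        """Split data into blocks and return the list of block checksums."""
        refs = []
        for offset in range(0, len(data), self.block_size):
            block = data[offset:offset + self.block_size]
            key = hashlib.sha256(block).hexdigest()
            if key not in self.blocks:
                # First time this block is seen: compress it and store it.
                self.blocks[key] = zlib.compress(block)
            # Seen before or not, the block gains one more reference.
            self.refcount[key] = self.refcount.get(key, 0) + 1
            refs.append(key)
        return refs

    def read(self, refs):
        """Rebuild the original data from a list of block checksums."""
        return b"".join(zlib.decompress(self.blocks[key]) for key in refs)
```

Writing the same block twice only bumps a reference count, which is why a datastore full of near-identical VM images can shrink so much under this kind of scheme.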

Now I’ve got myself some spare PE1950s. Two of them will end up for sale on Kijiji. I’ll keep one of them for the lab at home, at least until I can score another deal on a server like the SR1680MV.

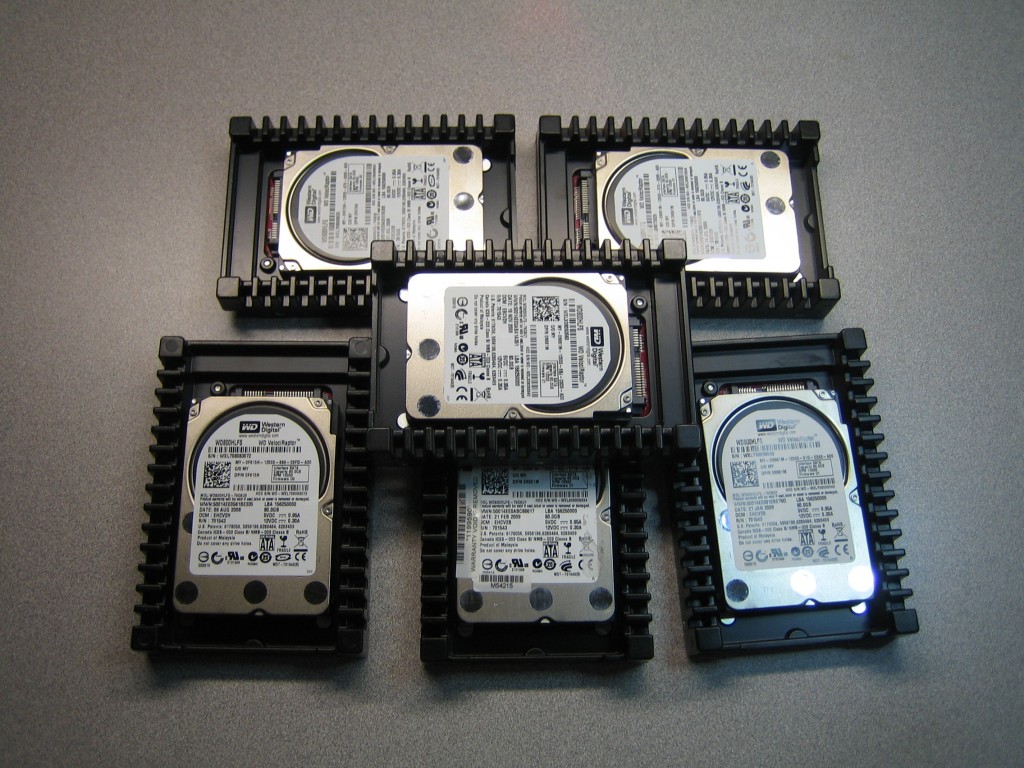

I’ve wasted no time racking up the servers at home. They need a bit of cleaning since they haven’t been cleaned in over 8 months. I’ll wipe the drives and post them on Kijiji. Hopefully I can still get decent coin out of them. The 4GB modules that make up the 32GB of RAM are still pretty expensive nowadays.