Picked up this solder station on Kijiji yesterday. I’d seen these units on eBay before and figured I might as well buy locally and save myself the shipping fees and the 3-week lead time.

Been reading about these units online for a while. Basically the same factory cranks these out under various brand names (Hakko, Yihua, Xytronic, etc.).

The premise is the same for all of them: the ability to solder SMD/SMT parts and, of course, the ability to remove components.

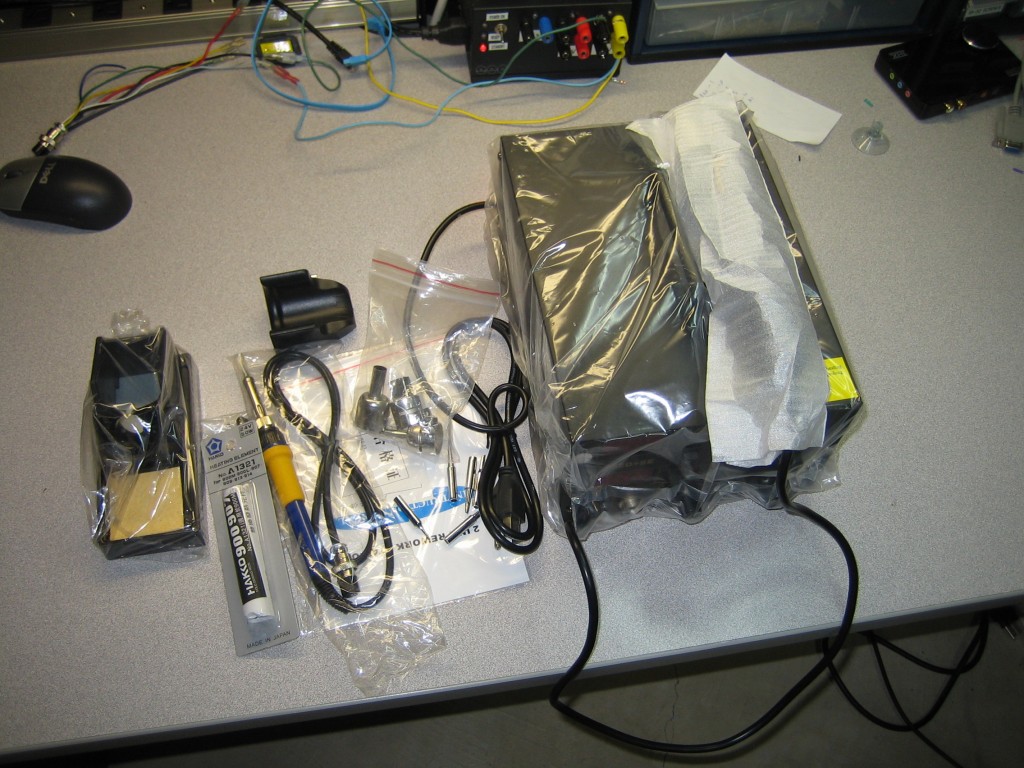

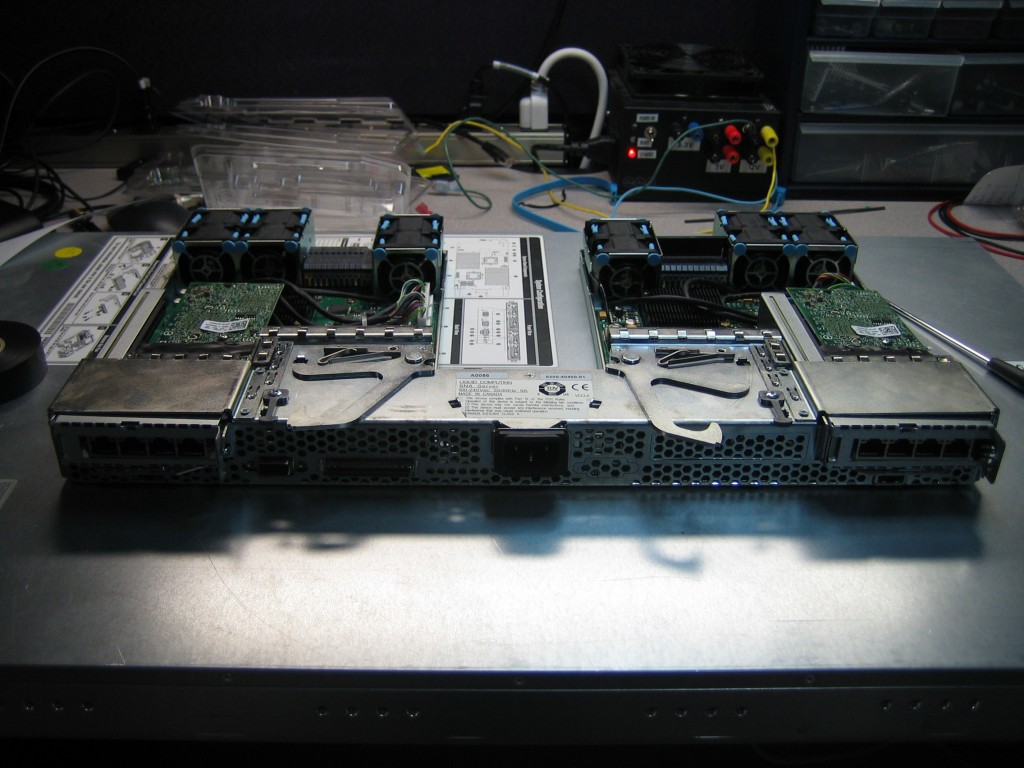

The unit came nicely packaged. The guy I bought it from also threw in an additional ceramic heating element and 5 more soldering tips. Additional focus heads would be nice, but I’m sure I can find those on eBay for cheap.

The soldering iron itself does feel a bit cheap. Haven’t tried soldering with it yet, so I’ll have a more accurate opinion once I do. I wish the soldering iron base was a bit heavier though. I don’t like it when the base slides around while I’m trying to park the iron on it. Will see if I can weigh it down a bit, since the inside of it is hollow.

The air gun is rather nice though. It heats up quickly and moves a fair bit of air on the high setting. Again, I haven’t tried it on an actual board yet, but I’m really looking forward to it. The air gun does shut off automatically when placed in the cradle, which is a nice feature, but considering the source, I’ll be sure to shut off the unit myself when I’m not using it.

Overall, this is a pretty decent unit for what it costs. Hopefully the soldering tips will last a fair while. I used to have a cheapie soldering iron that went through tips so quickly that it was more cost effective to buy a more expensive soldering iron with a tip that lasted a very long time.