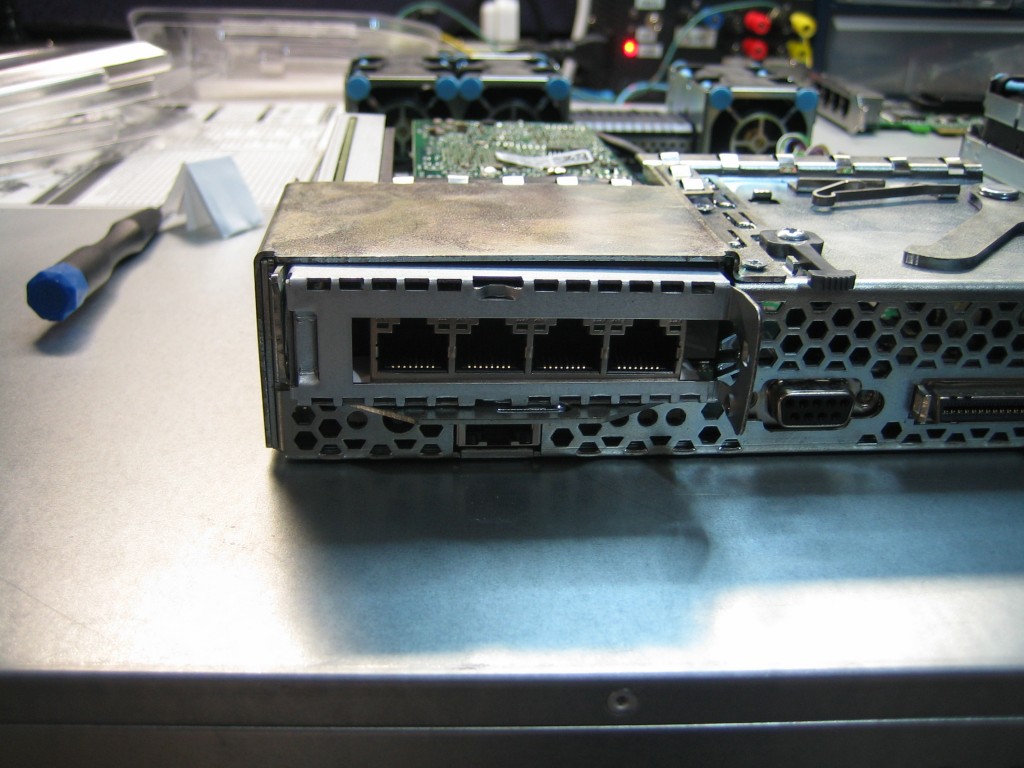

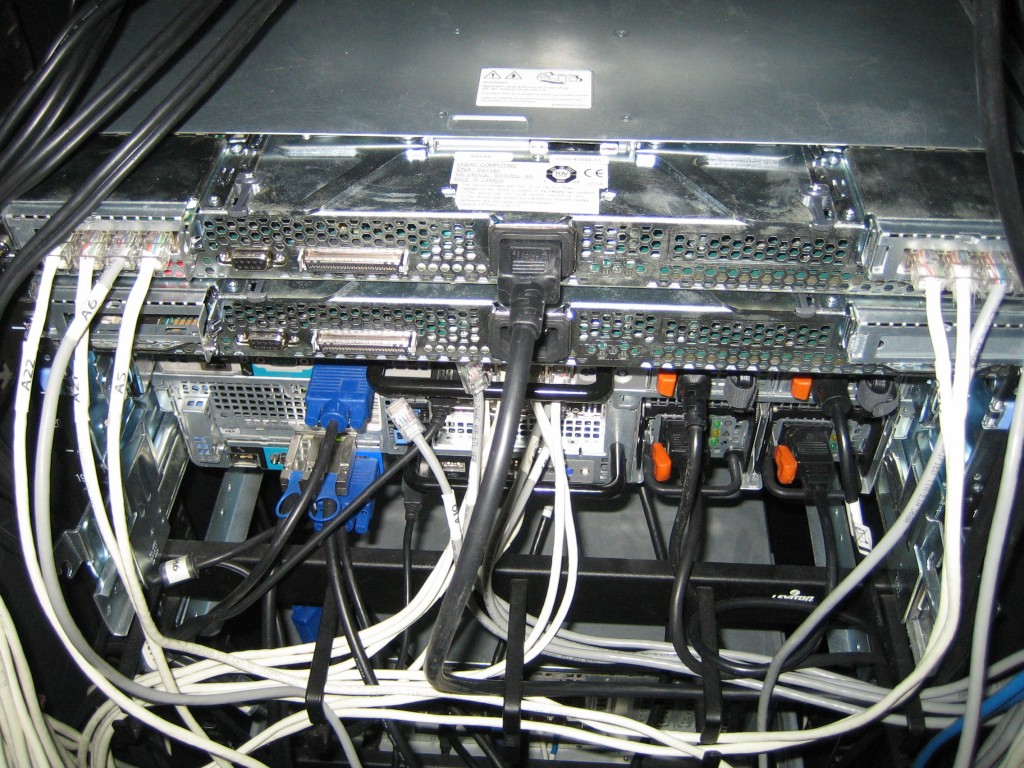

Continued working on the server today. I installed two Intel PRO/1000 VT quad-port network cards, one per node, giving each node two ports for SAN traffic, one port for LAN and one port for DMZ/WAN. The cards I had did not come with low-profile brackets, so I rigged them in place so they wouldn't move. Not the best fix, but since this server will stay at home for lab work and testing, it's not all that important.

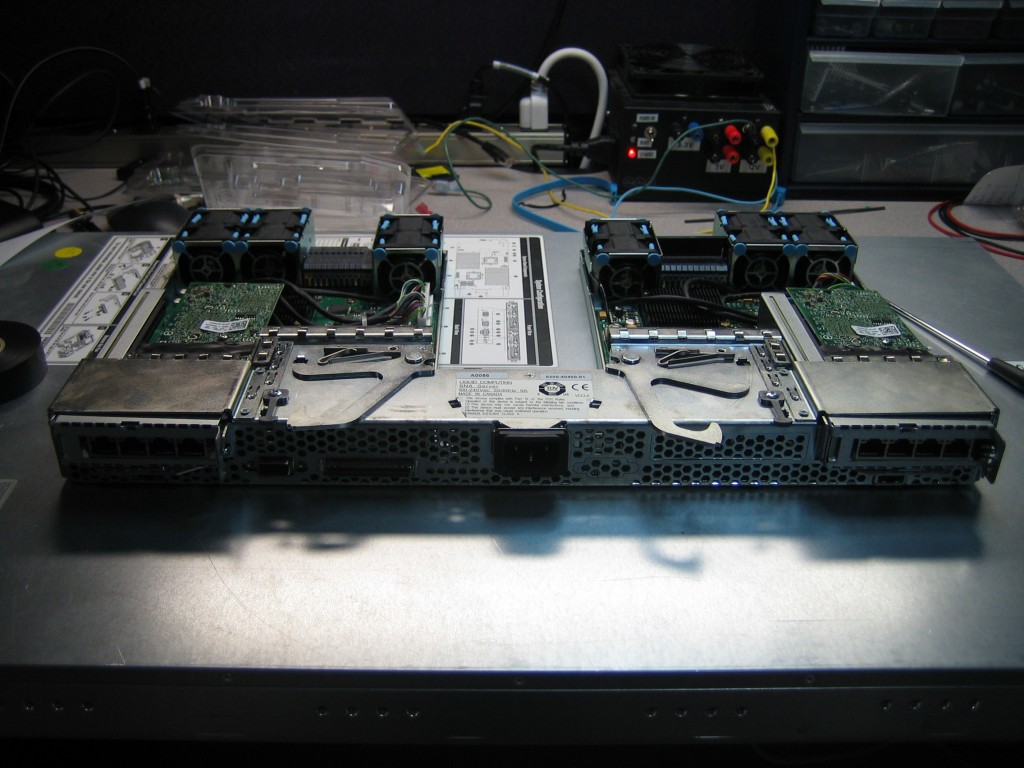

Both cards installed and ready to go back into the server. The server does have rear RJ45 jacks, but they don't seem to work as typical network ports: their link lights never come on when they're connected to a switch. From what I've read, these servers require a Liquid Computing switch for those ports to operate.

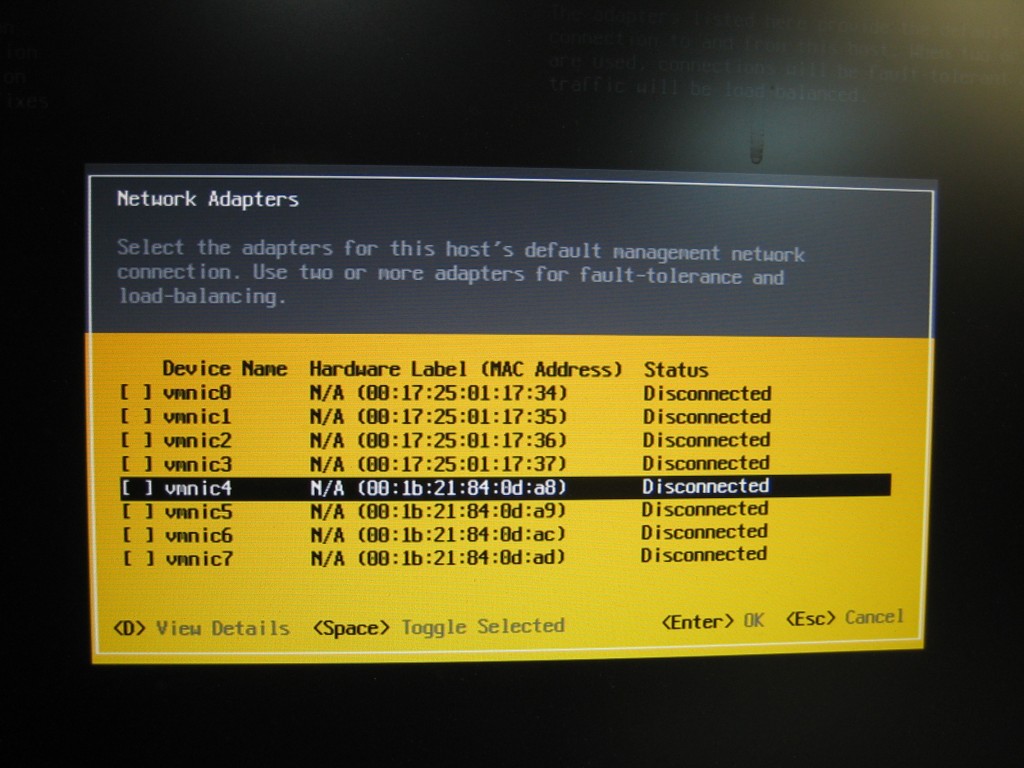

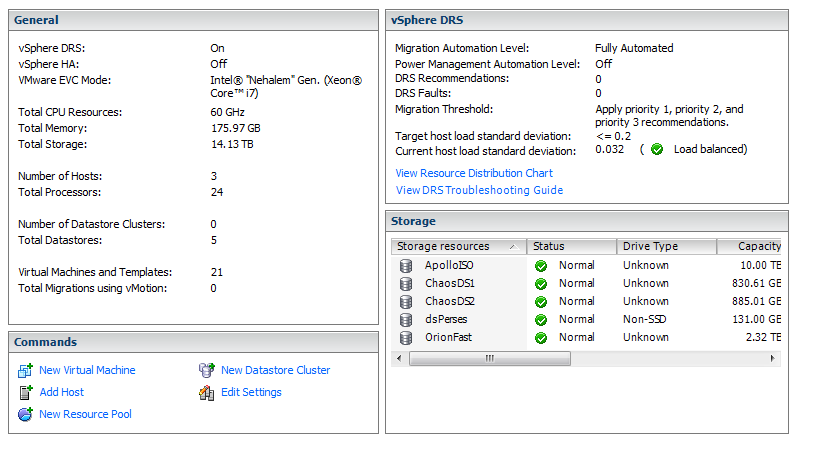

vSphere had no trouble recognizing the Intel NICs.

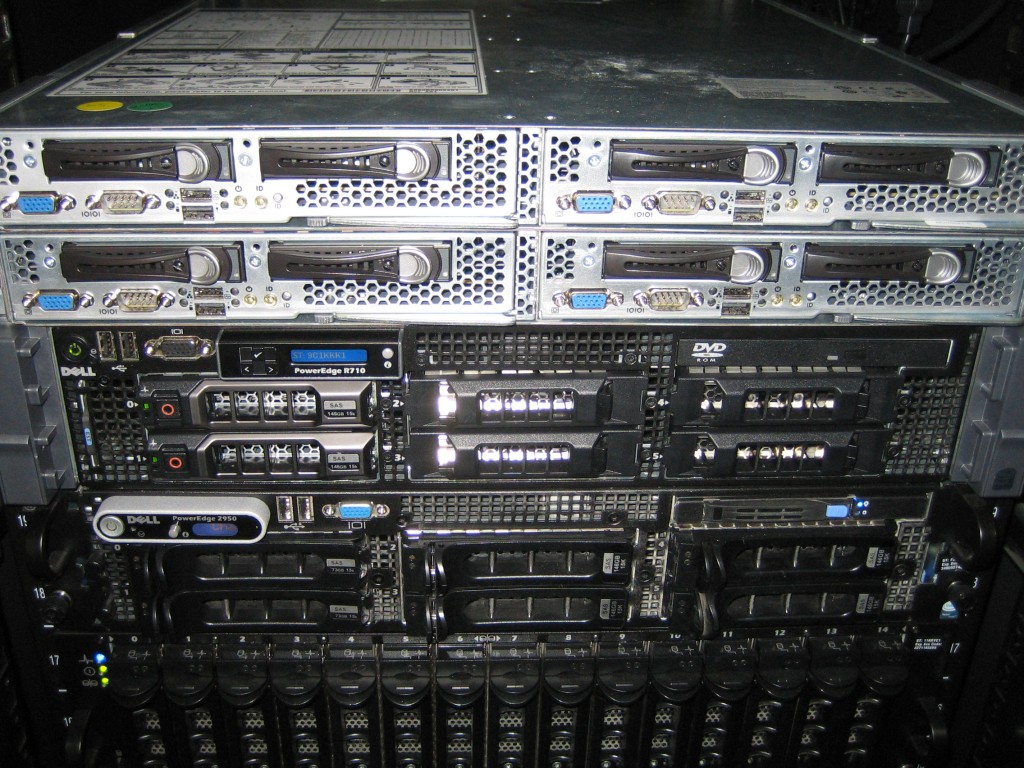

Server racked up. The other SR1680MV will get the same treatment once my low-profile network cards arrive.

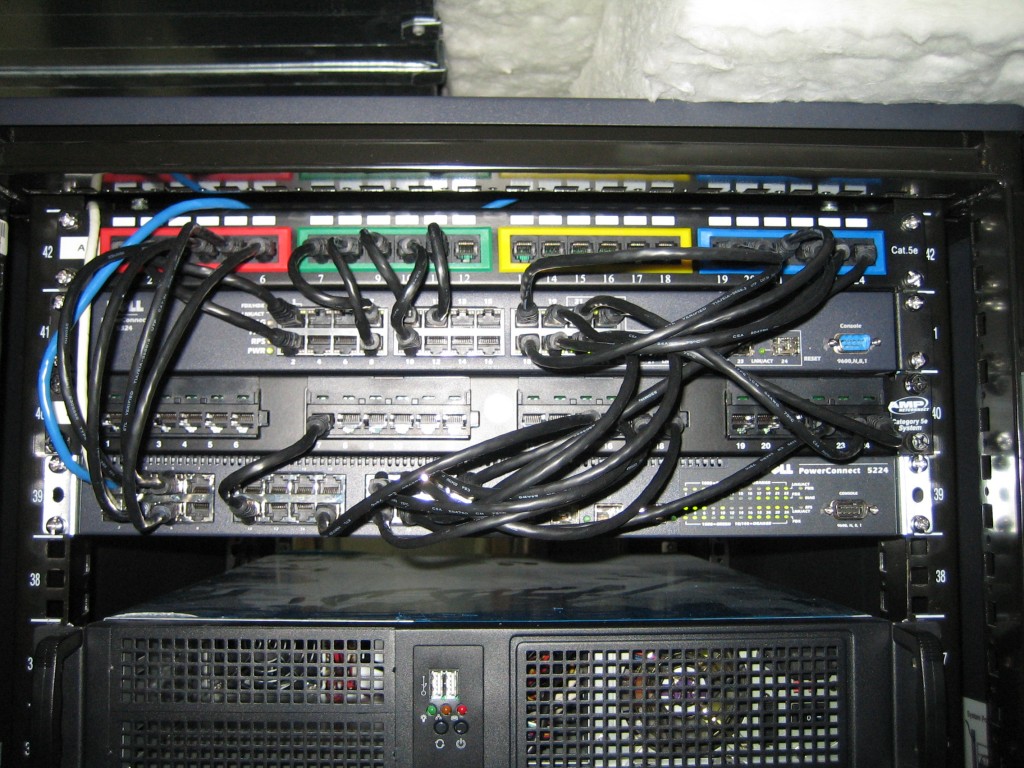

Network cables hooked up…

…and patched into the switches. The Dell 5324 handles SAN traffic, and the Dell 5224 handles LAN/DMZ traffic. The networks are also segmented onto separate VLANs.

I noticed that a few minutes after bootup, the LEDs on the front of the servers turn off. I assume that, given the custom nature of these servers, the LEDs take on a different meaning once boot is complete. It shouldn't be too hard to add a power LED indicator, since the nodes have an internal Molex header I can draw 5V from. I'll make this my next project.
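An LED can't be wired straight to the 5V rail; it needs a series current-limiting resistor. Here is a rough sizing using Ohm's law. The LED values are assumptions, not parts I've picked yet: a typical red LED with a forward voltage of about 2 V driven at 20 mA, so check the actual LED's datasheet before building.

$$
R = \frac{V_{\text{supply}} - V_f}{I_f} = \frac{5\,\text{V} - 2\,\text{V}}{0.020\,\text{A}} = 150\,\Omega
$$

$$
P_R = (V_{\text{supply}} - V_f)\,I_f = 3\,\text{V} \times 0.020\,\text{A} = 0.06\,\text{W}
$$

Under those assumptions, a common 150 Ω, 1/4 W resistor would be enough. A larger value such as 220 Ω (about 13.6 mA) would make a dimmer, cooler-running indicator, which is usually fine for a status light.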

Adding the server to the cluster was a snap. Next I'll create some test VMs to stress these nodes for a few days.